When writing a dissertation, especially one involving quantitative research, gathering and analyzing primary data is often one of the most challenging but crucial steps. Quantitative data allows hypotheses to be evaluated statistically, offering measurable evidence that can support or refute theoretical claims. However, the process of collecting and analyzing quantitative data can be intimidating for novice researchers. Improvisation is sometimes necessary when limitations such as time, budget, or access to respondents come into play.

This article will provide a comprehensive guide to improvising quantitative primary data for your dissertation, focusing on the key stages from developing a questionnaire to performing analysis in Excel or SPSS. This approach will assist you in maintaining the integrity of your research, even if the data collection process must be adjusted due to practical constraints.

Introduction

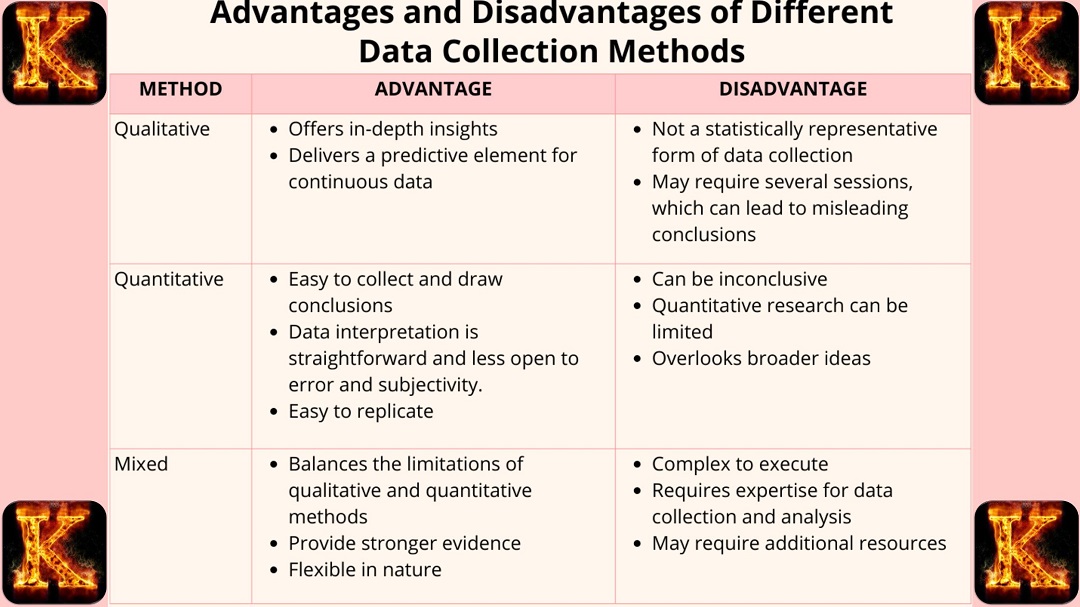

Quantitative research aims to quantify variables, test hypotheses, and establish statistical relationships between them. Primary data is typically collected through methods such as surveys, experiments, or structured interviews. When designed and executed correctly, primary quantitative data collection can yield accurate, reliable, and objective insights.

In some cases, researchers may face limitations in collecting primary data, such as insufficient time to survey a large population, difficulty accessing the right sample, or other logistical barriers. When these obstacles arise, improvisation can be a valuable tool. However, maintaining methodological rigor while making practical adjustments is crucial to ensure that the integrity and validity of the research are not compromised.

Literature Review

The importance of primary data collection in quantitative research has been widely discussed in academic literature. According to Creswell (2014), primary data offers the opportunity to tailor data collection directly to the research objectives, ensuring that the results are highly relevant to the study’s hypotheses. Quantitative data collection through structured instruments like questionnaires allows researchers to gather large amounts of data, often in a short period, and analyze it with statistical software such as SPSS or Excel.

However, improvisation in data collection is not uncommon, particularly in social sciences. Hammersley and Atkinson (2019) argue that improvisation in data collection can be part of adaptive research strategies that account for real-world constraints. It can help ensure that the research moves forward, albeit with modifications to the data collection process. Improvised approaches must still adhere to rigorous standards of reliability and validity to produce meaningful outcomes (Flick, 2018).

Developing a Questionnaire

A well-designed questionnaire is crucial for collecting valid quantitative data. The first step is to identify your research questions and hypotheses. Based on these, you can develop questions that measure the variables you are interested in. These questions should be clear, concise, and focused on quantifiable aspects of the research topic.

For example, if your dissertation focuses on consumer behavior, you might include questions like:

- On a scale of 1 to 5, how satisfied are you with product X?

- How frequently do you purchase product X?

- How likely are you to recommend product X to others?

Once you’ve designed the questionnaire, you can administer it to your sample population either online or in person. If you face challenges with respondent availability or sample size, consider making adjustments, such as targeting a smaller but more relevant sample or using online survey tools to increase accessibility.

Creating Columns in Excel or SPSS

After collecting your data, the next step is to organize it in a format that can be analyzed easily. Tools like Excel and SPSS are widely used for quantitative data analysis. In Excel, you can create columns that correspond to the different variables in your questionnaire. For example:

| Respondent ID | Satisfaction (1-5) | Frequency of Purchase | Likelihood to Recommend |
| --- | --- | --- | --- |
| 1 | 4 | 2 | 5 |
| 2 | 3 | 3 | 4 |

In SPSS, similar variables are entered into the data view, and the variable view allows for customization of the types of data and coding used (e.g., scale, nominal). Once the data is organized, you can begin the process of analysis.

Predetermined Hypotheses and Expected Results

Based on your hypothesis, you may have predetermined expectations for how you think the data will turn out. For instance, if your hypothesis is that higher satisfaction with a product leads to more frequent purchases, you might expect to see a positive correlation between satisfaction scores and frequency of purchase. This gives you a framework for analyzing the data once it is collected.

Filling Out the Spreadsheet Variable by Variable

Once you have a clear idea of the variables and hypotheses, the next crucial step is to begin organizing the data by filling out the spreadsheet, whether you’re using Excel, SPSS, or another data management tool. In this phase, each row will represent an individual respondent, while each column corresponds to a specific variable from your questionnaire. Accurately inputting the data ensures you can perform a thorough statistical analysis later, based on the hypotheses you aim to test.

Step 1: Identifying Variables and Structure

The first step in filling out the spreadsheet is to clearly identify the variables you are working with. These could include dependent, independent, or control variables, depending on the design of your study. For instance, if your research is examining customer satisfaction, potential variables might include:

- Satisfaction level (measured on a Likert scale from 1 to 5)

- Frequency of product use (e.g., number of times per week)

- Age (grouped into age ranges or recorded as a continuous variable)

- Income level (categorized or continuous)

Each variable will be assigned its own column, with every respondent occupying a row. For example:

| Respondent ID | Satisfaction Level | Frequency of Use | Age Group | Income Level |
| --- | --- | --- | --- | --- |
| 1 | 4 | 5 | 30-39 | $40,000-$50,000 |
| 2 | 3 | 3 | 20-29 | $30,000-$40,000 |

Step 2: Inputting Data

Input the responses you gathered from your survey into the corresponding cells of your spreadsheet. This manual process can take time, especially for large datasets, so accuracy is essential. Double-check that each value is entered in the correct column to avoid introducing errors that could skew your results.

- Nominal data (e.g., gender, region, or occupation) should be entered according to their assigned categories or numerical codes.

- Ordinal data (e.g., satisfaction levels on a scale) should maintain their rank-ordered structure.

- Continuous data (e.g., age, income) should be entered as raw numbers for precise analysis.

For instance, in SPSS, you can create variable labels and set the data type (e.g., scale, nominal, ordinal), while in Excel, each variable can be represented as a separate column.
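The coding rules above can also be checked mechanically once the responses are typed in. The following is a minimal sketch in Python, not part of the Excel or SPSS workflow described here: the `CODEBOOK` structure, the variable names, and the numeric codes are all hypothetical and would need to match your own questionnaire.

```python
# Hypothetical codebook: variable name -> measurement level and allowed values.
CODEBOOK = {
    "satisfaction": {"level": "ordinal", "allowed": {1, 2, 3, 4, 5}},
    "gender": {"level": "nominal", "allowed": {1, 2, 3}},  # assumed codes, e.g. 1=female, 2=male, 3=other
    "age": {"level": "scale", "min": 18, "max": 99},       # assumed plausible range
}

def validate_row(row):
    """Return a list of problems found in one respondent's row."""
    problems = []
    for name, rule in CODEBOOK.items():
        value = row.get(name)
        if value is None:
            problems.append(f"{name}: missing")
        elif rule["level"] == "scale":
            if not rule["min"] <= value <= rule["max"]:
                problems.append(f"{name}: {value} out of range")
        elif value not in rule["allowed"]:
            problems.append(f"{name}: {value} is not a valid code")
    return problems

# Illustrative rows only, not real survey data.
rows = [
    {"id": 1, "satisfaction": 4, "gender": 1, "age": 34},
    {"id": 2, "satisfaction": 7, "gender": 2, "age": 27},    # 7 is outside the 1-5 scale
    {"id": 3, "satisfaction": 3, "gender": 2, "age": None},  # age left blank
]

for row in rows:
    print(row["id"], validate_row(row))
```

A check like this catches entry mistakes such as a 7 on a five-point scale before they reach the analysis stage.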

Step 3: Handling Missing or Incomplete Data

In real-world research, it’s common to encounter missing or incomplete data, whether respondents skipped certain questions or provided incomplete answers. How you handle missing data will impact the accuracy of your analysis, so it’s important to choose an appropriate method.

Techniques to Handle Missing Data:

- Listwise Deletion: If a respondent has missing data on one or more variables, you can remove their entire row from the dataset. While this method is simple, it can reduce your sample size and potentially bias your results if the missing data is not random.

- Pairwise Deletion: Instead of removing the entire respondent’s data, pairwise deletion allows you to exclude only the missing responses while retaining the rest of the valid data. This method is preferable when only a few variables are missing and can help preserve the overall sample size.

- Imputation: Imputation methods involve filling in missing values with substituted estimates, such as the mean, median, or mode of the variable, or using more advanced techniques like regression imputation or multiple imputation. While this can improve the completeness of your data, it introduces assumptions that must be carefully documented in your methodology.

Regardless of which method you choose, it’s essential to document how missing or incomplete data was handled in your dissertation. Clearly explaining the rationale behind your decision will add transparency to your research process.
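To make the three options concrete, here is a small Python sketch under stated assumptions: the rows are invented for illustration, `None` stands for a skipped question, and the function names are hypothetical. The pairwise helper is shown for a single variable; in a real pairwise analysis the same idea is applied to each pair of variables being compared.

```python
from statistics import mean

# Illustrative rows only; None marks a skipped question.
rows = [
    {"id": 1, "satisfaction": 4, "frequency": 2},
    {"id": 2, "satisfaction": None, "frequency": 3},
    {"id": 3, "satisfaction": 5, "frequency": None},
    {"id": 4, "satisfaction": 3, "frequency": 1},
]
VARIABLES = ["satisfaction", "frequency"]

def listwise_delete(rows, variables):
    """Keep only respondents who answered every listed variable."""
    return [r for r in rows if all(r[v] is not None for v in variables)]

def pairwise_values(rows, variable):
    """Keep every observed value of one variable, ignoring gaps elsewhere."""
    return [r[variable] for r in rows if r[variable] is not None]

def mean_impute(rows, variable):
    """Return copies of the rows with gaps in one variable replaced by its mean."""
    fill = mean(pairwise_values(rows, variable))
    return [{**r, variable: fill if r[variable] is None else r[variable]} for r in rows]

complete = listwise_delete(rows, VARIABLES)
imputed = mean_impute(rows, "satisfaction")

print(len(complete))                         # respondents left after listwise deletion
print(pairwise_values(rows, "frequency"))    # observed frequency values
print([r["satisfaction"] for r in imputed])  # satisfaction after mean imputation
```

Note how listwise deletion halves this tiny sample, while mean imputation keeps every row at the cost of assuming the skipped answer was typical.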

Step 4: Documenting the Process

Throughout the data input process, it is crucial to maintain a detailed record of how each variable was coded and any decisions made regarding missing data. In your methodology chapter, document the following:

- How each variable was operationalized and coded in your spreadsheet

- Any adjustments made to the dataset, such as recoding or transformation of variables

- Techniques used to address missing data and why they were chosen

- Any assumptions made during the data entry process

By ensuring each of these elements is clearly documented, you’ll make it easier to replicate or validate your study in the future. Additionally, proper documentation improves the credibility of your findings, as your data handling procedures are transparent and aligned with standard research practices.

Filling out the spreadsheet correctly is a critical step that forms the foundation for all subsequent data analysis. Once the data is structured and cleaned, you’ll be in an excellent position to perform statistical tests and draw meaningful conclusions from your research.

Performing the Analysis

Once the data has been inputted into your spreadsheet, the next crucial step is to perform statistical analysis. This is where you begin to examine the relationships between variables, test your hypotheses, and derive insights from the data. The type of analysis you perform will depend on the nature of your data, the research questions, and the specific hypotheses you aim to test.

Statistical analysis allows you to identify patterns, measure the strength of relationships between variables, and determine the significance of your findings.

Step 1: Running Descriptive Statistics

Before diving into more complex analyses, it’s essential to start with descriptive statistics. Descriptive statistics help summarize and describe the basic features of your data, providing a snapshot of the dataset’s central tendencies and variability. Key measures include:

- Mean (average): Provides the central value of your data, often used for continuous variables like age or income.

- Median: The middle value in your data set, useful for understanding the distribution when outliers are present.

- Mode: The most frequent value, typically used for categorical variables.

- Standard deviation: Indicates how spread out the values are around the mean. A high standard deviation suggests greater variability.

- Frequency distribution: Shows how often each value appears, particularly useful for nominal or ordinal variables.

In Excel, you can use the Analysis ToolPak add-in to calculate descriptive statistics. In SPSS, you can go to "Analyze" > "Descriptive Statistics" > "Frequencies" or "Descriptives" to compute these values.

For example, if you're analyzing customer satisfaction levels (rated on a 1 to 5 scale), you can calculate the mean satisfaction score and standard deviation to get a sense of how satisfied customers are overall and how varied their responses are.
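If you want to cross-check what Excel or SPSS reports, the same measures can be computed with Python's standard library. This is only a sketch: the ratings below are made up for illustration, and `stdev` is the sample standard deviation (n - 1 denominator).

```python
from collections import Counter
from statistics import mean, median, mode, stdev

# Illustrative 1-5 satisfaction ratings, not real survey data.
satisfaction = [4, 3, 5, 4, 2, 4, 5, 3]

summary = {
    "mean": mean(satisfaction),
    "median": median(satisfaction),
    "mode": mode(satisfaction),
    "sd": stdev(satisfaction),  # sample standard deviation (n - 1 denominator)
}
frequencies = Counter(satisfaction)  # how often each rating was chosen

print(summary)
print(sorted(frequencies.items()))
```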

Step 2: Inferential Statistics

After running descriptive statistics, you can proceed to inferential statistics, which allow you to draw conclusions about the population based on your sample data. This typically involves testing hypotheses, determining relationships between variables, and exploring possible causal relationships. Common techniques include:

- Correlation Analysis: Measures the strength and direction of the relationship between two continuous variables. For example, you might analyze the correlation between customer satisfaction and frequency of purchase. A positive correlation indicates that as satisfaction increases, so does purchase frequency.

- Regression Analysis: Determines the effect of one or more independent variables on a dependent variable. If you want to assess whether satisfaction, age, and income level predict the likelihood of repeat purchases, you could use multiple regression analysis to model this relationship.

- ANOVA (Analysis of Variance): Compares the means of three or more groups to see if there is a statistically significant difference between them. For example, you could use ANOVA to compare the satisfaction levels of customers from different age groups to determine if age impacts satisfaction.

- t-tests: Compare the means of two groups. A t-test might be used to determine whether there’s a significant difference in satisfaction between male and female respondents.

- Chi-Square Test: Determines whether there’s a significant association between two categorical variables. If your study involves examining whether gender is associated with the likelihood of recommending a product, a chi-square test would be appropriate.

In SPSS, you can run these analyses under the “Analyze” menu. For regression, go to "Analyze" > "Regression" > "Linear" or "Logistic." For correlation, you can choose "Analyze" > "Correlate" > "Bivariate." In Excel, the Analysis ToolPak add-in provides functionality for running t-tests, ANOVA, and regression.
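To show what the correlation option computes, here is a hand-written Pearson coefficient in Python. It is a sketch for understanding rather than a replacement for SPSS or Excel; the function name `pearson_r` and the two short lists of scores are illustrative assumptions.

```python
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Illustrative scores only.
satisfaction = [4, 3, 5, 2, 4, 1]
frequency = [3, 2, 5, 1, 4, 1]

r = pearson_r(satisfaction, frequency)
print(round(r, 3))
```

A value near 1 means higher satisfaction goes with more frequent purchases, a value near -1 means the opposite, and a value near 0 means little linear relationship.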

Step 3: Testing the Hypotheses

Once you’ve chosen the appropriate statistical tests based on your research question and data type, you can begin testing your hypotheses. Each hypothesis typically has two forms:

- Null Hypothesis (H0): States that there is no relationship or difference between the variables being tested. For example, "There is no significant correlation between customer satisfaction and frequency of purchase."

- Alternative Hypothesis (H1): States that there is a relationship or difference. For instance, "There is a significant positive correlation between customer satisfaction and frequency of purchase."

After performing the test, you will obtain a p-value: the probability of seeing a result at least as extreme as yours if the null hypothesis were true. If the p-value is less than your chosen significance level (usually 0.05), you reject the null hypothesis in favor of the alternative. For example, if you find a p-value of 0.03 for the correlation between satisfaction and purchase frequency, you would reject the null hypothesis and conclude that the two variables are significantly correlated.
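One way to see what a p-value means is a permutation test, sketched below in Python. This is a different technique from the t-based p-value that SPSS prints for a correlation, and it is shown only because it needs nothing beyond the standard library. The data, the function names, the number of shuffles, and the seed are all illustrative assumptions.

```python
import random
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def permutation_p_value(x, y, n_permutations=5000, seed=0):
    """Two-sided p-value: share of shuffles whose |r| is at least the observed |r|."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break any real link between x and y
        if abs(pearson_r(x, shuffled)) >= observed:
            extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)

# Illustrative scores only.
satisfaction = [4, 3, 5, 2, 4, 1, 5, 3, 2, 4]
frequency = [3, 2, 5, 1, 4, 1, 4, 3, 2, 3]

p = permutation_p_value(satisfaction, frequency)
print(p < 0.05)  # True means the null hypothesis would be rejected at the 0.05 level
```

Shuffling one variable simulates a world in which the null hypothesis holds, so the p-value is simply how often that world produces a correlation as strong as the one observed.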

Step 4: Interpreting Results

After the statistical tests are performed, the next step is to interpret the results. Focus on the following key points:

- Effect size: Beyond statistical significance, how strong is the relationship between variables? For correlation, the effect size is indicated by the correlation coefficient (r), where values close to 1 or -1 represent strong relationships.

- Significance: Were your findings statistically significant? A p-value less than 0.05 usually indicates a significant result, suggesting that the observed relationship or difference is unlikely to have occurred by chance.

- Model fit: For regression analysis, check the R-squared value, which explains how much variance in the dependent variable is accounted for by the independent variables. A higher R-squared means a better model fit.
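For the model fit point, the R-squared of a one-predictor regression can be worked out by hand, as in this Python sketch. The function names and the data are hypothetical, and a real dissertation model with several predictors would normally be fitted in SPSS or Excel as described above.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope

def r_squared(x, y, intercept, slope):
    """Share of the variance in y that the fitted line accounts for."""
    my = mean(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))  # unexplained
    ss_tot = sum((b - my) ** 2 for b in y)                                  # total
    return 1 - ss_res / ss_tot

# Illustrative scores only.
satisfaction = [4, 3, 5, 2, 4, 1]
frequency = [3, 2, 5, 1, 4, 1]

intercept, slope = fit_line(satisfaction, frequency)
print(round(slope, 3), round(r_squared(satisfaction, frequency, intercept, slope), 3))
```

With a single predictor, R-squared equals the square of the correlation coefficient, which ties the effect size and model fit bullets together.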

Adjusting the Results and Interpreting the Findings

The process of analyzing quantitative data doesn’t always go as expected, and results may not align with your initial hypotheses. At this point, flexibility is key. After running your initial tests, you may discover that the relationships between variables are weak or insignificant. This is where improvisation and adjusting your approach come into play. Rather than viewing unexpected results as a setback, see them as an opportunity to explore alternative angles or refine your understanding of the data.

Step 1: Re-evaluating the Hypotheses

If your results show no significant relationships between key variables, the first step is to reconsider your hypotheses. Ask yourself:

- Are the original assumptions accurate? Could there be underlying factors you haven’t accounted for?

- Is there a need for additional variables? Sometimes important variables that explain the relationship may have been omitted. Adding these variables can improve the model’s predictive power.

For example, if the correlation between satisfaction and purchase frequency isn’t significant, you might consider whether demographic factors, such as age or income, act as moderators. In this case, running an interaction analysis or including demographic controls in your regression model could reveal hidden relationships.

Step 2: Testing Alternative Models

When your results don’t support the original hypotheses, it might be helpful to test alternative statistical models. There are several ways to approach this:

- Introducing Interaction Terms: If you suspect that certain variables interact with each other (e.g., satisfaction might affect purchase frequency differently depending on income level), introducing interaction terms into your regression can capture this effect. This will help you understand how two or more variables jointly impact the dependent variable.

- Exploring Non-linear Relationships: Sometimes relationships between variables aren’t linear. For example, satisfaction might increase purchase frequency up to a point, after which it plateaus. In such cases, non-linear regression models can help capture more complex patterns.

- Transforming Variables: If your variables are not normally distributed, this can affect the results of parametric tests. Logarithmic transformations or square roots can sometimes normalize skewed data, allowing for more reliable analysis.
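The effect of a transformation can be checked by comparing skewness before and after. The Python sketch below does this for a logarithmic transformation; the income figures are invented, and `skewness` is a simple population formula chosen for illustration, so SPSS may report a slightly different, bias-adjusted value.

```python
from math import log
from statistics import mean, pstdev

def skewness(values):
    """Population skewness: the mean cubed z-score (0 for symmetric data)."""
    m, s = mean(values), pstdev(values)
    return mean([((v - m) / s) ** 3 for v in values])

# Illustrative incomes with a long right tail, not real survey data.
income = [22000, 25000, 27000, 30000, 31000, 35000, 40000, 48000, 90000, 150000]
log_income = [log(v) for v in income]

print(round(skewness(income), 2), round(skewness(log_income), 2))
```

If the transformed variable is used in the analysis, the coefficients then describe changes in log income, which should be stated when reporting results.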

Step 3: Handling Outliers

Outliers—extreme data points that don’t follow the general trend—can skew your results and obscure relationships between variables. Identifying and handling outliers is crucial to improving the validity of your analysis. There are two main ways to address outliers:

- Remove outliers: This approach is useful if the outliers result from data entry errors or represent cases that are not typical of the population. However, be cautious when removing outliers, as they may contain valuable information.

- Winsorization: Rather than removing outliers, you can adjust the extreme values to bring them closer to the rest of the data. This preserves the data structure while reducing the influence of extreme values on your analysis.
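Winsorization can be sketched in a few lines of Python. The cut-off percentiles, the function name, and the purchase counts below are assumptions for illustration, and the simple index-based percentile rule used here is only one of several definitions, so other tools may pick slightly different limits.

```python
def winsorize(values, lower_pct=0.05, upper_pct=0.95):
    """Clamp values outside the chosen percentiles to the percentile values."""
    ordered = sorted(values)
    n = len(ordered)
    # Simple index-based cut points; other percentile definitions differ slightly.
    low = ordered[int(lower_pct * (n - 1))]
    high = ordered[int(upper_pct * (n - 1))]
    return [min(max(v, low), high) for v in values]

# Illustrative monthly purchase counts; 40 is an extreme value.
purchases = [3, 5, 4, 40, 2, 6, 3, 7, 5, 4]

adjusted = winsorize(purchases, lower_pct=0.10, upper_pct=0.90)
print(adjusted)
```

Unlike deletion, every respondent stays in the dataset; only the extreme value is pulled in to the chosen limit.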

Step 4: Adjusting for Missing Data

Missing data can compromise the accuracy of your analysis. If you notice gaps in your dataset, there are several ways to address this issue:

- Listwise Deletion: Remove respondents with missing data entirely from the analysis. This is appropriate when the amount of missing data is minimal and random.

- Imputation: Estimate and fill in missing values based on other information in your dataset. Imputation methods can be simple (e.g., using the mean or median) or complex (e.g., regression-based imputation).

Documenting how you handle missing data is essential for transparency and ensuring the integrity of your findings.

Step 5: Statistical Power and Sample Size

If your results are not statistically significant, consider whether your sample size was large enough to detect an effect. A small sample size may lead to low statistical power, which reduces the likelihood of detecting significant results even when relationships exist. If possible, increase your sample size by collecting more data to improve the robustness of your analysis.

Alternatively, you might conduct a post-hoc power analysis to assess whether the sample size was sufficient to test the hypotheses accurately.
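A related question is how many respondents would have been needed to detect a correlation of a given size. The Python sketch below uses the common Fisher z approximation for a two-sided test; the function name, the default significance level of 0.05, and the default power of 0.80 are assumptions, and dedicated power software may give slightly different numbers.

```python
from math import atanh, ceil
from statistics import NormalDist

def sample_size_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate n needed to detect correlation r (two-sided test, Fisher z method)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # value matching the desired power
    return ceil(((z_alpha + z_beta) / atanh(r)) ** 2 + 3)

print(sample_size_for_correlation(0.3))  # 85
```

Comparing this figure with your actual sample size gives a rough sense of whether a non-significant result may simply reflect too few respondents.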

Step 6: Revisiting the Literature

As you adjust your analysis, revisiting the literature can provide valuable insights into why certain relationships might not have appeared significant. Existing studies might suggest:

- Alternative models that have been successful in explaining similar phenomena.

- Contradictory findings that could provide an explanation for your own results.

- New theories or variables that could better explain the relationships in your data.

By comparing your results to the findings of previous research, you can contextualize any discrepancies or unexpected outcomes, and build a stronger discussion in your dissertation.

Step 7: Interpreting Findings

Once you've adjusted your analysis, it’s time to interpret the results in the context of your study. Key elements to focus on include:

- Statistical significance: Were your results significant? A p-value below 0.05 typically indicates statistical significance, but make sure to also consider effect size and practical significance.

- Strength and direction of relationships: For correlations and regression analysis, examine the strength (correlation coefficient or beta weight) and direction (positive or negative) of relationships between variables.

- Consistency with hypotheses: Did the findings align with your original hypotheses? If so, explain how they confirm your expectations. If not, explore possible explanations for the divergence, such as the presence of confounding variables or limitations in data collection.

Step 8: Using Qualitative or Secondary Data for Support

If your quantitative data analysis yields mixed or inconclusive results, you may supplement your interpretation with qualitative or secondary data. Qualitative data, such as interview responses or open-ended survey questions, can provide context to explain why certain relationships didn’t emerge as expected.

Similarly, secondary data from previous studies can help strengthen your argument. For instance, if your quantitative findings on customer satisfaction and purchase frequency don’t show a significant relationship, but existing literature supports a link, you can discuss possible limitations in your sample or methodology that may have influenced the results.

Step 9: Reporting Limitations and Making Adjustments

It’s important to acknowledge any limitations in your analysis, such as small sample size, missing data, or lack of control over certain variables. These limitations help readers understand the constraints of your study and provide transparency.

If necessary, adjust your hypotheses or refine your research questions based on the results. Your dissertation should demonstrate that even when unexpected outcomes arise, you’re capable of analyzing the data thoughtfully and adapting your approach to arrive at meaningful conclusions.

Rejecting or Not Rejecting the Null Hypothesis

At the conclusion of your analysis, you will either reject or fail to reject the null hypothesis, depending on the statistical significance of the results. For example, if your p-value is less than the chosen significance level (usually 0.05), you can reject the null hypothesis and conclude that the data support a relationship between the variables. If the p-value is higher, you fail to reject the null hypothesis, meaning the data does not provide sufficient evidence to support the alternative hypothesis. Failing to reject the null hypothesis does not prove that no relationship exists.

Discussion

In the discussion section, you should interpret your findings in relation to the literature and, where applicable, qualitative data. If you have access to secondary or qualitative primary data, refer to it to provide a broader context for your findings. For example, if your quantitative data suggests that satisfaction doesn’t significantly impact purchase frequency, you might explore qualitative insights that suggest other factors, such as brand loyalty or price sensitivity, play a more prominent role.

Furthermore, your discussion should acknowledge the limitations of your study, including any improvisations in data collection or analysis, and suggest avenues for future research.

Conclusion

Improvising quantitative primary data for a dissertation can be a practical solution to real-world challenges in research. By developing a clear questionnaire, organizing your data in tools like Excel or SPSS, and adjusting your analysis as needed, you can ensure that your research maintains methodological rigor, even when faced with constraints. Through careful interpretation and integration of secondary and qualitative data, you can produce meaningful insights that contribute to your field of study.
